Deploy your Siri clone offline, on your phone

Lesson 4: An everyday companion right in your pocket!

🚨 Alert. This article is dangerously practical; no AI buzzword bingo here. Oh, and did I mention? We have CODE. 🧑💻

Oh, and one more thing… we’re on 🔗 LinkedIn! Follow for snippets of knowledge.

We are back for the last lesson of the 4-part course “Build Your Own Siri”. Today we have the most exciting part of them all (drum roll 🥁…): deployment on the edge.

What we’re used to is running inference in the cloud, wrapped in an API consumed by a web application. It’s pretty, online, and serves millions. But we’re going to show you the exact opposite: running inference fully locally, offline, on the edge.

If you’ve missed it, here’s Lesson 3 of this course:

Fine tune your own Siri


Check out the full course here:

Table of contents

Preparing the environment

Edge deployment

Testing the final outcome

1. Preparing the environment

Even though we are developing a Siri-like application, I chose to deploy it on an Android device rather than an Apple one. iOS imposes constraints that leave little room to wiggle, so Android lets us complete the use case end to end.

The IDE of choice is Android Studio: it's designed specifically for Android applications and it’s extremely versatile. You can download it here if you don’t have it.

So, go ahead and get started on a new project!

Once you've got your project up and running, the first thing you'll need is a project structure and all the dependencies installed. I put a lot of thought into how to structure this, and I'm really happy with how it turned out!

```
BuildYourOwnSiri/
├── app/
│   ├── src/
│   │   ├── main/
│   │   │   ├── assets/
│   │   │   │   ├── ggml-tiny.en.bin
│   │   │   │   └── unsloth.Q2_K.gguf
│   │   │   ├── cpp/
│   │   │   │   ├── llama/
│   │   │   │   ├── whisper/
│   │   │   │   └── native-lib.cpp
│   │   │   ├── java/com/example/byos/
│   │   │   │   ├── MainActivity.kt
│   │   │   │   ├── FunctionHandler.kt
│   │   │   │   ├── FunctionRegistry.kt
│   │   │   │   └── NativeBridge.kt
│   │   │   ├── res/
│   │   │   │   └── layout/
│   │   │   │       └── activity_main.xml
│   │   │   └── AndroidManifest.xml
│   │   └── CMakeLists.txt
│   └── build.gradle.kts
├── gradle/
├── build.gradle.kts
├── gradle.properties
├── settings.gradle.kts
├── gradlew
├── gradlew.bat
└── README.md
```

OK! Before we start coding, let's set up the CMake file and the Gradle files, then clone the whisper.cpp and llama.cpp repos.

For the CMake file, we build native-lib as a shared library and pull in llama.cpp and whisper.cpp as subdirectories. We force a CPU-only ggml build, enable OpenMP (via libomp), disable the GPU backends, share a single copy of ggml between both projects, and pass -fno-finite-math-only, which ggml requires.

Note: On Android 15+ we also set 16 KiB page-size link options to avoid loader issues.
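If you want to check whether a test device actually uses 16 KiB pages (and therefore depends on those link options), you can query the page size at runtime. This is a minimal POSIX sketch with hypothetical function names; inside the app you would log the value with the LOGI macro used in native-lib rather than just returning it.

```cpp
#include <cassert>
#include <unistd.h>

// Returns the kernel's memory page size in bytes via POSIX sysconf.
// Most Android devices report 4096; 16 KiB-page devices report 16384.
long page_size_bytes() {
    return sysconf(_SC_PAGESIZE);
}

// True on devices where native libraries need 16 KiB-aligned segments to load.
bool uses_16k_pages() {
    return page_size_bytes() == 16384;
}
```

On a 4 KiB device this returns false, which is why a missing 16 KiB link option can go unnoticed until the app runs on newer hardware.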

Everything in the Gradle files stays as it was when the project was created, except for a few changes to app/build.gradle.kts: enabling externalNativeBuild (CMake), targeting only arm64-v8a, and passing the ABI and CMake arguments consistently.

As for the content of the files, you can find them on GitHub.

The next step is to clone the whisper.cpp and llama.cpp repos.

To do this, we clone the upstream repositories into the native folder of the Android module: ggerganov/llama.cpp goes in app/src/main/cpp/llama and ggerganov/whisper.cpp in app/src/main/cpp/whisper.

These are the exact paths that the add_subdirectory command in CMake expects.

```bash
cd app/src/main/cpp
git clone --depth=1 https://github.com/ggerganov/llama.cpp llama
git clone --depth=1 https://github.com/ggerganov/whisper.cpp whisper
```

Now that the environment is ready and everything is set up, let’s get to coding!

2. Edge deployment

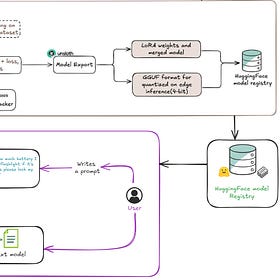

Before we start implementing, we need to make a small change to our notebook from the previous lesson.

Unfortunately, we can't use the 4-bit quantized model: there is a 4 GB size limit, and our 4-bit model is 4.9 GB. To solve this, we switched to a 2-bit quantized model that weighs 3.2 GB, which fits within the limit.
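A quick back-of-the-envelope check explains why a "2-bit" model still weighs 3.2 GB: divide the file size in bits by the number of parameters. This sketch assumes decimal gigabytes and roughly 8.03 billion parameters for Llama 3.1 8B; the helper names are hypothetical.

```cpp
#include <cassert>

// Effective bits stored per model weight: total file bits divided by parameter count.
// The file also holds metadata and the tokenizer, so this slightly overestimates.
double bits_per_weight(double file_bytes, double n_params) {
    return file_bytes * 8.0 / n_params;
}

// Does a model file of this size fit under a byte limit?
bool fits_under(double file_bytes, double limit_bytes) {
    return file_bytes <= limit_bytes;
}
```

With those numbers, the 4-bit file works out to about 4.9 bits per weight and the 2-bit file to about 3.2. Both sit above their nominal widths because K-quant formats store per-block scales and llama.cpp's quantization presets keep some tensors at higher precision.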

However, the responses will be less accurate, and I want you to be aware that this is the price of compressing the model this aggressively. Nevertheless, it can get the job done…

Prompt:

“Open the flashlight”

Response:

Set battery status to get battery status. Play track “Viva La Vida”. Set volume to 90%. Create note “Groceries” with content.

🦗

🦗🦗

Yes… almost perfect!😅

I promise you that it gets it right sometimes!

This is the change we made in the notebook:

```python
model.push_to_hub_gguf(
    repo_id="YourProfile/NameForTheModel",
    tokenizer=tokenizer,
    quantization_method=["q2_k", "q3_k_s", "q3_k_m"],
    token=hf_token,
)
```

It's not that complex, and you don't even need to rerun the whole notebook: just use Unsloth's 'FastLanguageModel.from_pretrained' function to load your most recently trained model. After that, you only need to run this command:

```
!curl -fsSL https://ollama.com/install.sh | sh
```

This will install Ollama, after which you can quantize the model.

And there you go — you now have a 2-bit quantized model!

Now we can finally deploy on the edge.

To do this, we need to download our GGUF file locally and add it to the main/assets folder, along with the BIN file of the Whisper Tiny model. This lets us use Siri 100% locally, with no internet connection required.
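Before bundling the files, it's worth a quick sanity check that they really are model files and not, say, a Git LFS pointer or an HTML error page saved by a failed download. GGUF files start with the ASCII bytes "GGUF", and the older ggml format used by whisper.cpp's .bin models starts with the magic 0x67676d6c stored little-endian. A minimal sketch with hypothetical helper names:

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <string>

// Reads the first 4 bytes of a file as a little-endian uint32.
// Returns false if the file can't be opened or is shorter than 4 bytes.
static bool read_magic_le(const std::string& path, uint32_t& magic) {
    std::ifstream f(path, std::ios::binary);
    unsigned char b[4];
    if (!f.read(reinterpret_cast<char*>(b), 4)) return false;
    magic = uint32_t(b[0]) | (uint32_t(b[1]) << 8) |
            (uint32_t(b[2]) << 16) | (uint32_t(b[3]) << 24);
    return true;
}

// GGUF files begin with the bytes 'G' 'G' 'U' 'F' (0x46554747 read little-endian).
bool looks_like_gguf(const std::string& path) {
    uint32_t m = 0;
    return read_magic_le(path, m) && m == 0x46554747u;
}

// Legacy ggml files, such as whisper.cpp's ggml-*.bin models, begin with 0x67676d6c.
bool looks_like_whisper_ggml(const std::string& path) {
    uint32_t m = 0;
    return read_magic_le(path, m) && m == 0x67676d6cu;
}
```

Calling checks like these in native-lib before handing the paths to whisper.cpp and llama.cpp lets you return a clear error instead of a generic load failure.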

Next, we implement the Siri application. To help you understand each file, I've organized the walkthrough so you can see what each one does.

NativeBridge

This file is the JNI bridge between the C++ core (native-lib.cpp) and the Kotlin app (MainActivity.kt). It loads the native library and declares the C++ functions as external, so MainActivity can call them directly from Kotlin.

```kotlin
package com.example.byos

object NativeBridge {
    init {
        // Load only our native library (stub implementation)
        System.loadLibrary("native-lib")
    }

    fun load() = Unit // call this in onCreate() to trigger the init block

    external fun initWhisper(modelPath: String): Boolean
    external fun transcribe(audioPath: String): String
    external fun initLlama(modelPath: String): Boolean

    // Async methods
    external fun initAsyncCallback(callback: LlamaCallback): Boolean
    external fun runLlamaAsync(prompt: String): Boolean
    external fun cleanupCallback()

    // Cleanup method
    external fun cleanup()
}

interface LlamaCallback {
    fun onLlamaResult(result: String)
}
```

MainActivity

This is where all the app flow takes place. Here we wire up the UI, request mic/storage permissions, locate the local model files, and initialize the on-device Whisper (ASR) and LLaMA (reasoning) engines via NativeBridge. We also register the JNI callback to receive LLaMA results and trigger the record → transcribe → tool-call execution loop.

FunctionRegistry

The FunctionRegistry defines all the functions that the model can call. Each function is described by its name, purpose and parameters, and then exported as JSON for the native side. This enables LLaMA to identify the available actions and allows Android to execute them safely through the FunctionHandler.

```kotlin
package com.example.byos

import com.google.gson.Gson

object FunctionRegistry {
    data class ParamSchema(
        val type: String,
        val properties: Map<String, Map<String, String>> = emptyMap(),
        val required: List<String> = emptyList()
    )

    data class Tool(
        val name: String,
        val description: String,
        val parameters: ParamSchema
    )

    private val tools: List<Tool> = listOf(
        Tool(
            name = "toggle_flashlight",
            description = "Turns on the device flashlight if available.",
            parameters = ParamSchema(type = "object")
        ),
        //... The remaining functions
    )

    @JvmStatic
    fun toolsJson(): String = Gson().toJson(tools)
}
```

FunctionHandler

The FunctionHandler contains all the functions that LLaMA can call and runs them on the device. Here, we have defined functions such as toggle_flashlight, which turns on the flashlight, and get_battery_status, which shows your battery level and whether it is charging or not.

```kotlin
package com.example.byos

import android.app.ActivityManager
import android.content.Context
import android.content.Intent
import android.media.AudioManager
import android.net.Uri
import android.os.BatteryManager
import android.os.Build
import android.hardware.camera2.CameraManager
import android.hardware.camera2.CameraCharacteristics
import java.io.File
import java.io.IOException

object FunctionHandler {
    /**
     * Dispatches a function call parsed from the LLM.
     * Returns a user-friendly result string.
     */
    fun dispatch(context: Context, name: String, args: Map<String, String>): String {
        // Use the exact function name from the AI response
        return when (name) {
            "toggle_flashlight" -> runCatching {
                val cameraManager = context.getSystemService(Context.CAMERA_SERVICE) as CameraManager
                val cameraIds = cameraManager.cameraIdList
                for (cameraId in cameraIds) {
                    val characteristics = cameraManager.getCameraCharacteristics(cameraId)
                    val hasFlash = characteristics.get(CameraCharacteristics.FLASH_INFO_AVAILABLE) == true
                    if (hasFlash) {
                        // Note: This is a simplified toggle - in a real app you'd track state
                        cameraManager.setTorchMode(cameraId, true)
                        return "✅ Flashlight turned on"
                    }
                }
                "❌ No flashlight available on this device"
            }.getOrElse { "❌ Failed to control flashlight: ${it.message}" }

            "get_battery_status" -> runCatching {
                val bm = context.getSystemService(Context.BATTERY_SERVICE) as BatteryManager
                val level = if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
                    bm.getIntProperty(BatteryManager.BATTERY_PROPERTY_CAPACITY)
                } else -1
                val charging = bm.isCharging
                "Battery: $level%, Charging: $charging"
            }.getOrElse { "Failed to get battery status: ${it.message}" }

            //... Other functions
        }
    }
}
```

native-lib

The native-lib.cpp file is where the heavy processing happens using C++. Here we set up and run the Whisper model for audio transcription and the LLaMA model for text generation.

These native functions are exposed through JNI, allowing them to be called from Kotlin. The file also handles model initialization and cleanup, and sends results back to MainActivity through callbacks.

The Kotlin files control the flow and user interaction, while this file acts as the core engine of the app.
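The link between the two sides is a naming convention: for each external fun in NativeBridge, the Android runtime looks up a C function named after the package, class, and method, which is why the transcription entry point is called Java_com_example_byos_NativeBridge_transcribe. This hypothetical, simplified helper reproduces the JNI short-name rule, which is handy if you rename the package and need to know which symbols to update.

```cpp
#include <cassert>
#include <string>

// Builds the C symbol name the runtime looks up for a Kotlin `external fun`,
// following the JNI short-name rule: "Java_" + class + "_" + method, with
// '.' or '/' turned into '_' and a literal '_' escaped as "_1".
// Simplified sketch: no handling of ';', '[', non-ASCII, or overloaded methods.
std::string jni_symbol(const std::string& fq_class, const std::string& method) {
    auto mangle = [](const std::string& s) {
        std::string out;
        for (char c : s) {
            if (c == '.' || c == '/') out += '_';
            else if (c == '_') out += "_1";
            else out += c;
        }
        return out;
    };
    return "Java_" + mangle(fq_class) + "_" + mangle(method);
}
```

The extern "C" on each native function matters for the same reason: without it, the C++ compiler would mangle the name and the runtime lookup would fail.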

Transcription runs Whisper locally. When you call 'transcribe(path)' from Kotlin, the native code loads the recorded audio file, feeds it into Whisper, and returns the transcribed text.
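The transcribe function relies on a read_wav_mono_s16_16k helper and a WavInfo struct whose definitions aren't shown. Whisper consumes 16 kHz mono samples as floats, so here is a minimal sketch of what such a reader could look like, assuming a standard RIFF/WAVE file; the WavInfo fields are hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <fstream>
#include <string>
#include <vector>

// Hypothetical stand-in for the article's WavInfo (its real fields aren't shown).
struct WavInfo {
    uint16_t channels = 0;
    uint32_t sample_rate = 0;
    uint16_t bits_per_sample = 0;
};

// RIFF/WAVE stores all integers little-endian.
static uint16_t le16(const unsigned char* p) { return static_cast<uint16_t>(p[0] | (p[1] << 8)); }
static uint32_t le32(const unsigned char* p) {
    return uint32_t(p[0]) | (uint32_t(p[1]) << 8) | (uint32_t(p[2]) << 16) | (uint32_t(p[3]) << 24);
}

// Reads a WAV file and converts its 16-bit PCM samples to floats in [-1, 1).
// Anything that isn't mono, 16-bit, 16 kHz uncompressed PCM is rejected.
bool read_wav_mono_s16_16k(const std::string& path, std::vector<float>& pcm, WavInfo& info) {
    std::ifstream f(path, std::ios::binary);
    unsigned char riff[12];
    if (!f.read(reinterpret_cast<char*>(riff), sizeof(riff))) return false;
    if (std::memcmp(riff, "RIFF", 4) != 0 || std::memcmp(riff + 8, "WAVE", 4) != 0) return false;

    bool have_fmt = false;
    unsigned char chunk[8];  // 4-byte chunk id + 4-byte chunk size
    while (f.read(reinterpret_cast<char*>(chunk), sizeof(chunk))) {
        const uint32_t size = le32(chunk + 4);
        if (std::memcmp(chunk, "fmt ", 4) == 0) {
            if (size < 16) return false;
            std::vector<unsigned char> fmt(size);
            if (!f.read(reinterpret_cast<char*>(fmt.data()), size)) return false;
            if (le16(fmt.data()) != 1) return false;  // format tag 1 = uncompressed PCM
            info.channels        = le16(fmt.data() + 2);
            info.sample_rate     = le32(fmt.data() + 4);
            info.bits_per_sample = le16(fmt.data() + 14);
            have_fmt = true;
        } else if (std::memcmp(chunk, "data", 4) == 0) {
            if (!have_fmt || info.channels != 1 || info.sample_rate != 16000 ||
                info.bits_per_sample != 16) return false;
            std::vector<unsigned char> raw(size);
            if (!f.read(reinterpret_cast<char*>(raw.data()), size)) return false;
            pcm.resize(size / 2);
            for (size_t i = 0; i < pcm.size(); ++i) {
                const int16_t s = static_cast<int16_t>(le16(raw.data() + 2 * i));
                pcm[i] = s / 32768.0f;  // scale to [-1, 1)
            }
            return true;
        } else {
            f.seekg(size, std::ios::cur);  // skip chunks we don't need, e.g. "LIST"
        }
        if (size & 1) f.seekg(1, std::ios::cur);  // chunks are padded to an even size
    }
    return false;  // no "data" chunk found
}
```

Walking the chunks, rather than assuming a fixed 44-byte header, matters because some recorders insert extra chunks such as LIST before the audio data.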

```cpp
extern "C" JNIEXPORT jstring JNICALL
Java_com_example_byos_NativeBridge_transcribe(JNIEnv *env, jobject, jstring audioPath) {
    if (!wctx) {
        return env->NewStringUTF("⚠️ Whisper not initialized");
    }

    std::string path = jstr2std(env, audioPath);

    // Load and validate audio file format
    std::vector<float> pcm_data;
    WavInfo winfo;
    if (!read_wav_mono_s16_16k(path, pcm_data, winfo)) {
        return env->NewStringUTF("Unsupported audio format. Record mono 16-bit PCM @16kHz (WAV)");
    }

    // Configure Whisper for fast speech recognition
    whisper_full_params wparams = whisper_full_default_params(WHISPER_SAMPLING_GREEDY);
    wparams.n_threads        = std::max(2, (int)std::thread::hardware_concurrency() - 1);
    wparams.no_timestamps    = true;
    wparams.single_segment   = true;  // Process as single segment (faster for short audio)
    wparams.no_context       = true;  // Don't use context from previous segments
    wparams.max_len          = 64;    // Max segment length in characters; only applied when token_timestamps is enabled
    wparams.translate        = false;
    wparams.print_progress   = false;
    wparams.print_realtime   = false;
    wparams.print_timestamps = false;

    // Run speech recognition
    if (whisper_full(wctx, wparams, pcm_data.data(), (int)pcm_data.size()) != 0) {
        return env->NewStringUTF("⚠️ Whisper inference failed");
    }

    // Extract transcribed text from all segments
    const int n_segments = whisper_full_n_segments(wctx);
    std::string result;
    for (int i = 0; i < n_segments; ++i) {
        const char* text = whisper_full_get_segment_text(wctx, i);
        if (text) {
            result += text;
        }
    }

    return env->NewStringUTF(result.c_str());
}
```

When it comes to the LLaMA inference stage, we take the user's input and append it to a predefined system prompt that looks like this:

```cpp
"SYSTEM: You are a function calling assistant. Respond ONLY with valid JSON.\n\nFORMAT: [{\"name\": \"function_name\", \"arguments\": {}}]\n\nFUNCTIONS: " + tools_json_str + "\n\nRULES:\n- ONLY output JSON\n- NO explanations\n- NO other text\n\nUSER: "
```

This prompt tells the model what to generate and describes all the available functions. The full prompt is then fed to the model, which generates the output:

```cpp
const int base_pos = n_full; // Starting position for generation

// Initialize greedy sampler (always picks most likely token)
llama_sampler* sampler = llama_sampler_init_greedy();
if (!sampler) { LOGE("failed to create sampler"); callJavaCallback("[]"); return; }

auto t0 = std::chrono::steady_clock::now();
auto timed_out = [&] {
    using namespace std::chrono;
    return duration_cast<seconds>(steady_clock::now() - t0).count() > 15; // Timeout to prevent hanging
};

std::string out; // Accumulate generated text here

// Generate tokens one by one until we have complete JSON
try {
    for (int i = 0; i < g_max_tokens; ++i) {
        if (timed_out()) { LOGI("timeout after %d tokens", i); break; }

        // Sample next most likely token
        llama_token id = llama_sampler_sample(sampler, g_llama_ctx, -1);
        if (llama_vocab_is_eog(g_vocab, id)) break; // End of generation token

        // Convert token to text and add to output
        char buf[256];
        int n = llama_token_to_piece(g_vocab, id, buf, sizeof(buf), 0, true);
        if (n > 0) out.append(buf, n);

        // Feed the generated token back into the model for next prediction
        llama_batch genb = llama_batch_init(1, 0, 1);
        genb.n_tokens     = 1;
        genb.token[0]     = id;
        genb.pos[0]       = base_pos + i; // Position in sequence
        genb.n_seq_id[0]  = 1;
        genb.seq_id[0][0] = 0;
        genb.logits[0]    = 1;            // We need logits for next prediction

        const int rc = llama_decode(g_llama_ctx, genb);
        llama_batch_free(genb);
        if (rc != 0) {
            LOGE("decode failed at gen step %d", i);
            break;
        }

        // Check if we've generated complete JSON - stop early to prevent garbage
        if (out.find("[{\"name\"") != std::string::npos && out.find("\"arguments\"") != std::string::npos) {
            // Count braces and brackets outside string literals to check for complete JSON
            int open_braces = 0;
            int open_brackets = 0;
            bool in_string = false, escaped = false;
            for (char c : out) {
                if (in_string) {
                    if (escaped)        escaped = false;
                    else if (c == '\\') escaped = true;
                    else if (c == '"')  in_string = false;
                    continue;
                }
                if (c == '"')      in_string = true;
                else if (c == '{') open_braces++;
                else if (c == '}') open_braces--;
                else if (c == '[') open_brackets++;
                else if (c == ']') open_brackets--;
            }
            // If we have balanced braces/brackets and end with ']', we have complete JSON
            if (open_braces == 0 && open_brackets == 0 && out.back() == ']') {
                break;
            }
        }
    }
} catch (const std::exception& e) {
    LOGE("runLlamaAsync: exception during sampling: %s", e.what());
    out = "[]";
} catch (...) {
    LOGE("runLlamaAsync: unknown exception during sampling");
    out = "[]";
}

// Clean up sampler
llama_sampler_free(sampler);

// Clear KV cache so next request starts fresh
llama_memory_t mem = llama_get_memory(g_llama_ctx);
if (mem) {
    llama_memory_seq_rm(mem, /*seq_id=*/0, /*p0=*/0, /*p1=*/-1);
}

LOGI("runLlamaAsync: RAW OUTPUT: '%s'", out.c_str());
```

If the model produces tool calls in JSON format, they are passed back to Kotlin for execution. If it doesn't, a keyword-based fallback still tries to pick the right action. This is mainly there to work around the model's hallucinations.

```cpp
// Ensure we return valid JSON even if empty
if (out.empty()) out = "[]";

// Fallback: if model generated natural language instead of JSON, use keyword matching
if (out.find("[{\"name\"") == std::string::npos ||
    out.find("You can use") != std::string::npos ||
    out.find("Your task is") != std::string::npos ||
    out.find("sequence of") != std::string::npos ||
    out.find("commands necessary") != std::string::npos ||
    out.find("NOT A PLAN") != std::string::npos ||
    out.find("UTTERANCE") != std::string::npos) {
    LOGI("runLlamaAsync: Using keyword fallback");

    // Use simple keyword matching to determine user intent.
    // Lowercase via unsigned char: std::tolower is undefined for negative char values.
    std::string lower_input = user_input;
    std::transform(lower_input.begin(), lower_input.end(), lower_input.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });

    if (lower_input.find("flashlight") != std::string::npos ||
        lower_input.find("light") != std::string::npos ||
        lower_input.find("torch") != std::string::npos) {
        out = "[{\"name\": \"toggle_flashlight\", \"arguments\": {}}]";
    } else if (lower_input.find("battery") != std::string::npos ||
               lower_input.find("power") != std::string::npos) {
        out = "[{\"name\": \"get_battery_status\", \"arguments\": {}}]";
    } else if (lower_input.find("volume") != std::string::npos ||
               lower_input.find("sound") != std::string::npos) {
        out = "[{\"name\": \"set_volume\", \"arguments\": {\"level\": 50}}]";
    } else if (lower_input.find("music") != std::string::npos ||
               lower_input.find("play") != std::string::npos) {
        out = "[{\"name\": \"play_music\", \"arguments\": {\"track_name\": \"default\"}}]";
    } else if (lower_input.find("close") != std::string::npos &&
               lower_input.find("app") != std::string::npos) {
        out = "[{\"name\": \"close_application\", \"arguments\": {\"app_name\": \"current\"}}]";
    } else {
        // Default fallback
        out = "[{\"name\": \"get_battery_status\", \"arguments\": {}}]";
    }
}
```

Finally, there's the callback function: it takes the output and hands it back to Kotlin, which then executes the tool calls.

```cpp
void callJavaCallback(const std::string& result) {
    if (!g_jvm || !g_callback_obj || !g_callback_mid) {
        LOGE("callJavaCallback: missing callback setup");
        return;
    }

    JNIEnv* env = nullptr;
    int getEnvStat = g_jvm->GetEnv((void**)&env, JNI_VERSION_1_6);
    if (getEnvStat == JNI_EDETACHED) {
        if (g_jvm->AttachCurrentThread(&env, nullptr) != 0) {
            LOGE("callJavaCallback: failed to attach thread");
            return;
        }
    } else if (getEnvStat != JNI_OK) {
        LOGE("callJavaCallback: failed to get JNI env");
        return;
    }

    // Check if callback object is still valid
    if (!g_callback_obj) {
        if (getEnvStat == JNI_EDETACHED) {
            g_jvm->DetachCurrentThread();
        }
        return;
    }

    jstring jresult = env->NewStringUTF(result.c_str());
    env->CallVoidMethod(g_callback_obj, g_callback_mid, jresult);
    // Don't leave a Kotlin exception pending on this native thread
    if (env->ExceptionCheck()) {
        LOGE("callJavaCallback: exception thrown in onLlamaResult");
        env->ExceptionDescribe();
        env->ExceptionClear();
    }
    env->DeleteLocalRef(jresult);

    if (getEnvStat == JNI_EDETACHED) {
        g_jvm->DetachCurrentThread();
    }
}
```

And voilà! We have a local Siri app that works offline. Let's try it out!

P.S. And here is the GitHub repo so you can do this too!

3. Testing the final outcome

Now that we have a fully usable, locally built application, we can test it and see what happens.

As I mentioned at the beginning, the model hallucinates and gives inaccurate responses. When its output isn't valid JSON, the fallback function maps the request to an action through keyword matching, so the app still responds sensibly to common commands.

For this part, I have made a short video showing how the application works:

Yes, I know it's slow, but only for now.

In the future, we can expect models that achieve better results in less space, which will be a significant advancement.

For this reason, I'd like to invite you to read our article, ‘Beyond the Cloud: Why the Future of AI Is on the Edge’, in which we provide important information about why the future of AI will be local and not require an internet connection.

Check it out here:

Wrapping Up

If you’ve followed this far (drop a 🔥 in the comments, it’s been a journey), you've gone through the complete process of building a fully local, fully offline function-calling assistant from scratch. By this point, you should have:

Knowledge of how to prepare your dataset for function calling.

Knowledge of how to fine-tune and quantize LLaMA 3.1 8B using Unsloth.

Knowledge of how to deploy your GGUF file on edge, enabling you to have a Siri-like application on your phone.

Coming Up Next: Bonus course 🚨

TO BE CONTINUED!

But still…

It’ll be hands-on.

It’ll include real code, real configs, and real gotchas.

And now is the time to be on edge, not on the edge!

🔗 Check out the code on GitHub and support us with a ⭐️