Beyond the Cloud: Why the Future of AI Is on the Edge

How Smart Devices Are Becoming Our Most Trusted Everyday Companion.

In the field of AI, data and memory are the most important factors.

Without these two, you can’t achieve anything of value.

That is why the toughest challenges show up at the edge, where storage is capped by the hardware itself. The catch is that the most impressive AI models are also the most memory-hungry.

But here's the twist: the devices we use the most, mobile phones, tablets, wearables, even smart cameras and cars, are edge devices. And they are no longer just secondary tools.

Take your phone, for example. It is already running AI every day: from real-time photo enhancement and voice assistants to background noise cancellation during calls or even suggesting replies before you start typing. AI is already running on our most personal hardware.

It makes sense because we spend more time on our phones than on our laptops. Global trends show mobile devices now drive the majority of online activity.

So why not bring AI closer to where people actually are?

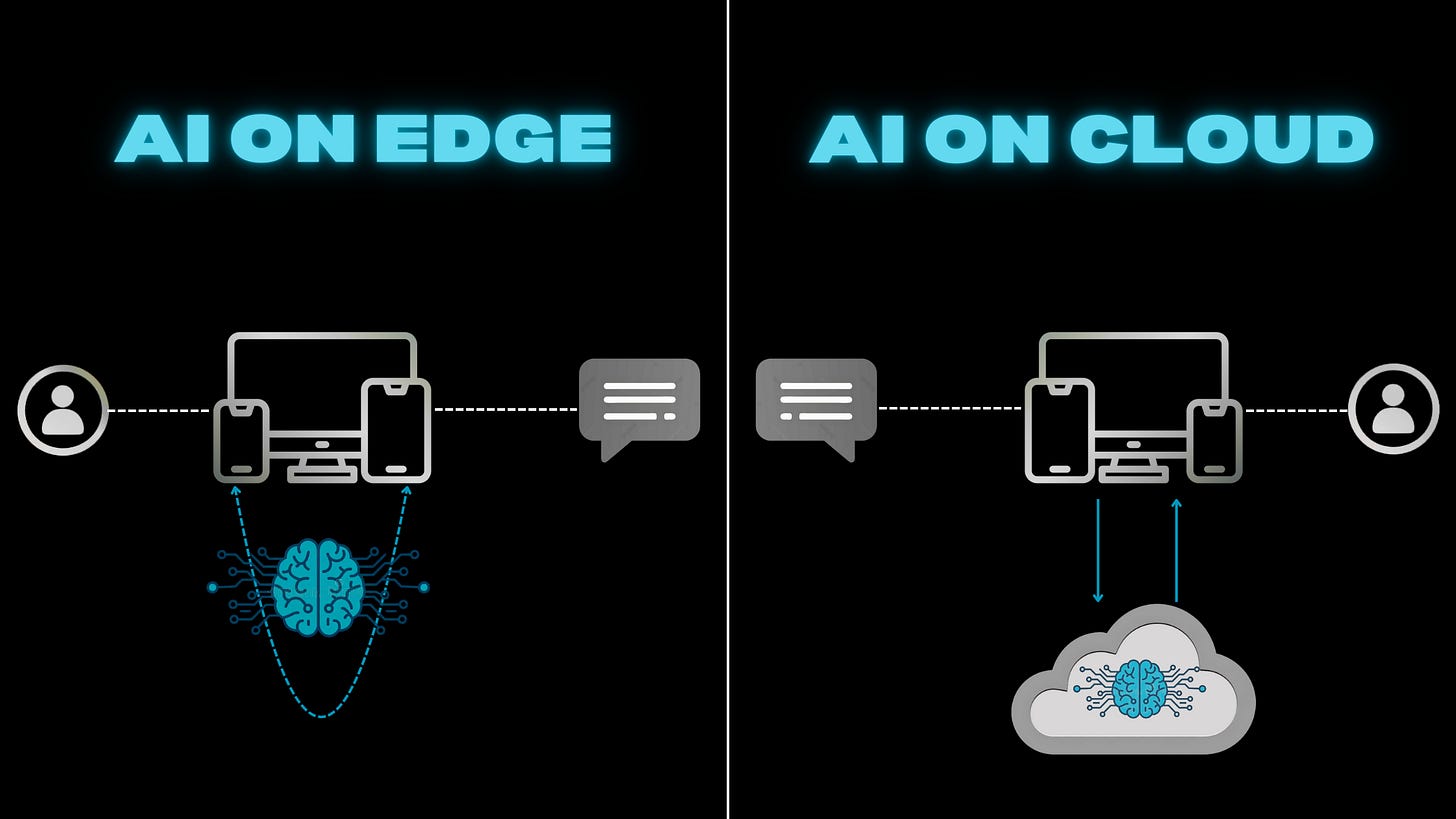

But running AI on an edge device is nothing like running it in the cloud. There is no endless memory and no high-powered GPUs. Every task has to be tuned for battery life, storage limits, and instant speed.

That’s where edge innovation is happening now, not in building bigger models, but in making smarter, faster, lighter ones that fit in your pocket and are available offline 24/7.

Key takeaways

Instant insight, no cloud detour required. There's no need to wait — everything is available straight away on the Edge.

Industries need AI in their machinery. If we're talking about industrial robots, army airplanes or tanks, everything needs to be upgraded so that they don't fall behind. The cloud doesn't come in handy here.

Don't be on the edge. Be on edge. The real impact will be made if you are ready to make a difference and adapt to the future.

What is happening in the industry now?

The rise of AI is clear to all of us. In recent years, technological advancements have made it possible to incorporate AI into cameras, phones, cars, and even airplanes, making our lives easier.

Now, the industry is emerging in creating smaller models that can easily be placed on small devices, such as Llama 3.1 8B/Llama 3 8B-Instruct (Meta) and Gemma 2B/Gemini Nano (1.8B & 3.5B) (Google), and others.

Or to integrate AI inside the phone, eliminating the need for the cloud, like Apple's on-device AFM (~3B), which is part of "Apple Intelligence" and powers handwriting recognition, Live Translate in FaceTime, and system-wide writing suggestions without internet.

Or that Samsung's Galaxy S25 ships with Gauss 2 Compact (≈4B) plus a local slice of Gemini Nano that can handle heavier workloads.

AI in phones is all anyone seems to talk about lately. The hype is everywhere — new launches, flashy demos, bold promises of “smarter than ever” devices.

A perfect example? People are buying the new iPhone 16 purely because it “has AI”. Everyone is convinced it’s a game-changer.

But just a week later, reviews started rolling in… and they weren’t exactly flattering. It felt exactly like the old iPhone 15, same photos, same apps, same speed.

The only real upgrade? The hype.

The problem is that there aren't many resources on how to work with AI in real and important situations. There's plenty of information on buzzwords and how to implement an LLM on your device in 15 minutes, but not on how to solve real-life problems.

How is it used?

When we talk about AI on edge devices, we are not just talking about large language models (LLMs). We are also talking about computer vision (CV), which powers everything from autonomous driving to catching shoplifters, and even simple machine learning (ML) algorithms that flag suspicious calls as spam.

The possibilities are expanding fast. AI on the edge will not only get better results but will also open the door for integration into devices we never thought could run AI locally, from basic home appliances to tiny wearables.

And we are just at the beginning of that journey.

For now, let’s move on to the next question:

When should we use it?

AI on the edge isn’t just about running flashy models on local devices — it’s about solving real problems. You don’t just drop an LLM onto a gadget and call it a day. The real wins come when edge AI addresses challenges that actually matter, like:

Cutting costs by reducing the need for constant cloud processing.

Instant responses without the lag of sending data back and forth.

Protecting privacy by keeping sensitive information on the device.

Working offline where connectivity is limited or nonexistent.

Those are some of the problems that can be resolved by implementing a scalable AI on your local machine.

To see why edge AI matters, imagine a vehicle that must detect a sudden obstacle, a pedestrian stepping into the road, or another car swerving ahead, and apply the brakes in under 100 milliseconds. That’s faster than the blink of an eye. On paper, this sounds straightforward, but in practice, it demands a highly optimized convolutional neural network (CNN) running directly on the vehicle’s neural processing unit (NPU). The system has to:

Ingest and preprocess raw video frames from the onboard camera.

Run real-time object detection and classification.

Estimate collision risk based on trajectory predictions.

Trigger the braking system, all without a single round-trip to the cloud.

This is why it is useful to have locally integrated AI that can do all of this without a prior internet connection, because without this integrated AI, an unexpected accident could happen at any time.

Another approach is to have an assistant that lives entirely on your device, with every piece of data it uses processed and stored locally. No server handoffs. No invisible trip to the cloud.

Apple’s Siri on iOS 17 and the new on-device Assistant on Android work like this, listening for ultra-light wake words and running highly optimized language models — in the range of two to three billion parameters — directly on the device’s neural processing hardware.

That means tasks like checking the weather, setting a timer, or drafting a quick message can all be done in milliseconds, without a single byte of your voice leaving the device.

Why does this matter? Because once your speech or personal data leaves your hardware, you lose control over it. Even a harmless query could contain sensitive information like your home address, your work schedule, a credit card number tucked into a reminder, and once it’s in the cloud, it’s exposed to a whole chain of storage systems, logs, and potential attackers.

By keeping AI inference entirely on-device, that information never leaves the secure enclave.

When should we not use it?

Not every problem needs an AI running locally, and forcing it where it doesn’t belong is just asking for trouble.

Sometimes it’s not worth the complexity, and sometimes it’s simply not possible. Edge devices have hard limits: storage space, memory, and raw processing power. Push a massive model — say, something with over 10 billion parameters — onto a small device, and you’ll quickly hit those limits.

In these cases, the cloud is your friend. Offloading the heavy lifting to cloud infrastructure gives you virtually unlimited compute, the ability to scale instantly, and the flexibility to run models far too large for any local processor.

The trick is knowing when the benefits of local AI (speed, privacy, offline use) actually matter, and when the efficiency and scale of the cloud make more sense.

To illustrate this, let’s consider an international law company that must process and synthesize thousands of pages of contracts, regulatory filings, and case law in a single due diligence engagement.

This sounds doable until you realize that summarizing or cross-referencing tens of thousands of tokens requires a 20 B+ parameter model with a 50 K+ token context window, far beyond any mobile or on-prem device.

How would you feel if you had to download a 20 GB model to each lawyer’s laptop, then wait for infrequent app updates just to get the latest legal precedents?

What if regulatory changes came out today, but your on-device AI was last updated months ago?

This is why cloud AI is indispensable for massive multi-document understanding:

Scalable GPU clusters handle massive context windows, continuous model retraining keeps legal language fresh, and integrated pipelines (OCR → vector DB → LLM) deliver briefs in minutes, not days.

Without this cloud-native approach, companies face stalled workflows and increased risk of missing critical clauses.

That's why, when we're talking about lots of data, big models (with over 10 billion parameters) and the need for GPUs, the answer to the question

'Where should we put it?' is 'On cloud'.

What for the future?

AI is no longer just a cloud-bound phenomenon. It is moving into our hands, our homes, and even onto our wrists.

The next generation of LLMs and intelligent agents will not just be bigger.

They will be smarter, faster, and far more efficient.

Advances in edge hardware with high-speed NPUs, expanded on-chip memory, and ultra-low power consumption will allow compact models to rival today’s massive cloud giants in both accuracy and latency.

Imagine an AI that is always on, invisible, and intimately aware of your context. It will translate languages on the fly, monitor your health, and optimize your environment, all without sending a single byte to the cloud.

While some compute heavy tasks will remain the domain of supercomputers, the bulk of everyday intelligence will happen locally, instantly, and privately.

Even more exciting is that devices will learn from you directly. On-device model updates will allow your phone, watch, or home assistant to adapt in real time, growing smarter in a way that is uniquely yours without compromising your privacy.

In short, AI’s future lives on edge.

What’s next for us?

If you’ve been following our publications, you know we’ve been diving deep into Edge AI. Now, we’re taking it a step further. Our next two articles will show you how to build a real function-calling assistant on the edge as part of our four-part series, “Build Your Own Siri.”

But that’s not all. The second article will reveal an audio deepfake detector capable of analyzing phone calls in real time and instantly telling you whether the voice on the other end is real or a deepfake. This is the next chapter in our two-part series, “Deepfake Audio Detection.”

These aren’t just tutorials; they are a hands-on look at the future of AI, showing what’s possible when cutting-edge technology meets everyday devices. If you’ve ever wondered how to bring powerful AI directly into your hands while keeping it private and fast, these articles are made for you.

If you didn’t already read the articles, here are the links to the previous series: