The mind’s keyboard: How Brain2Qwerty is transforming thoughts into text

A deep dive into the most exciting research yet, turning brainwaves into text

Imagine if your thoughts could type themselves: no keyboards, no swipes, just ideas turning into text. Sounds like sci-fi, right? But thanks to Meta’s Brain2Qwerty research, that future is closer than you think.

In this article, I will explain how this brain-computer interface (BCI) deciphers neural signals, and why it could change communication for people who struggle with conventional speech and typing.

How does this magic happen? Let’s break it down together, from the big-picture concept to the nitty-gritty technical details.

Table of Contents

Brain2Qwerty: The coordinator of your orchestra

Technical Explanation of Brain2Qwerty

Next steps

Conclusion

1. Brain2Qwerty: The coordinator of your orchestra

Picture your brain as an orchestra, with every section playing its part to create the symphony of your thoughts. When you decide to speak or write, it’s like every instrument comes together in harmony. Now, imagine Brain2Qwerty as the brilliant conductor who reads this score and effortlessly turns it into text.

Meta’s study had volunteers (the unsung heroes) type out sentences while their brain activity was recorded in a completely noninvasive way - no drilling required! By training a deep learning model on these signals, researchers managed to decode the sentences being typed directly from brain activity.

The model decoded the typed text with an average Character Error Rate (CER) of 32%, meaning roughly one character in three still needed fixing. And guess what: skilled typists hit as low as 19%. It’s not just about the tech; it’s also about our own 'typing' skills.

Why does this matter?

This technology can revolutionize communication for people with difficulty speaking or typing due to disabilities. Imagine someone who has lost the ability to speak being able to communicate their thoughts simply by thinking.

Hopefully, we will have control over which thoughts are displayed and which are not.

Although it is still in its early stages and not ready for everyday use, Brain2Qwerty is paving the way for a future where our minds can directly communicate with machines, transforming how we interact with one another.

2. Technical Explanation of Brain2Qwerty

Now that we understand how Brain2Qwerty orchestrates thought-to-text translation, let's examine the technology behind it.

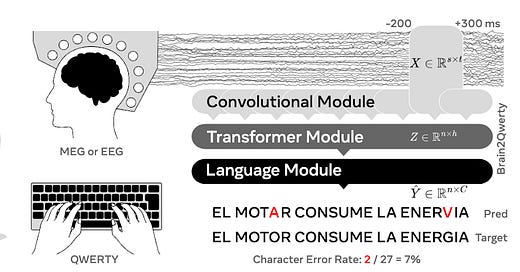

Meta’s Brain2Qwerty represents a significant advancement in brain-computer interface (BCI) technology, using deep learning to decode language from brain activity. The research focuses on noninvasive methods, MEG (magnetoencephalography) and EEG (electroencephalography), to capture the neural signals associated with language production.

How did they do this?

The study involved 35 healthy participants who were tasked with typing memorized sentences while their brain activity was recorded. Researchers collected extensive datasets over approximately 20 hours per participant, allowing for robust training of the model.

The Brain2Qwerty architecture has three main components:

Convolutional Module: This extracts spatial and temporal features from the raw brain signal data. Convolutional layers are great at capturing localized patterns in time-series data, which is crucial for identifying neural signatures associated with different letters and words.

Transformer Module: Following feature extraction, the transformer module processes these features using self-attention mechanisms that allow the model to weigh the importance of different parts of the input data contextually. This is essential for understanding linguistic structures and maintaining coherence in sentence generation.

Language Model Module: Finally, a pre-trained character-level language model refines the output predictions. This module uses existing linguistic knowledge to correct errors and improve the accuracy of translating neural signals into coherent text.
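To make the third stage more concrete, here is a minimal sketch of how a character-level language model can correct a decoder's guesses. It is not Meta's implementation: the function names, the toy bigram table, and the probabilities are all made up for illustration, and a simple bigram model with Viterbi search stands in for whatever language model and search procedure the real system uses. The convolutional and transformer stages are not modeled here; their output is represented by the hypothetical per-keystroke probabilities in `char_probs`.

```python
import math


def greedy_decode(char_probs):
    """Pick the most likely character at each keystroke, ignoring context."""
    return "".join(max(step, key=step.get) for step in char_probs)


def viterbi_rescore(char_probs, bigram_logp, lm_weight=1.0, floor=-10.0):
    """Find the string that maximizes decoder score + lm_weight * LM score.

    char_probs:  list of {char: probability} dicts, one per keystroke
                 (hypothetical decoder output).
    bigram_logp: {(previous_char, char): log-probability} from a toy
                 character-level language model.
    floor:       log-probability used for bigrams missing from the table.
    """
    if not char_probs:
        return ""
    # best[c] holds (score, text) for the best path that ends in character c.
    best = {c: (math.log(p), c) for c, p in char_probs[0].items() if p > 0}
    for step in char_probs[1:]:
        new_best = {}
        for c, p in step.items():
            if p <= 0:
                continue
            new_best[c] = max(
                (score + math.log(p)
                 + lm_weight * bigram_logp.get((prev, c), floor), text + c)
                for prev, (score, text) in best.items()
            )
        best = new_best
    return max(best.values())[1]


# Hypothetical decoder output: the second keystroke is ambiguous between
# the neighboring keys "h" and "j".
char_probs = [
    {"t": 0.9, "r": 0.1},
    {"h": 0.4, "j": 0.6},
    {"e": 0.8, "w": 0.2},
]

# Toy bigram log-probabilities (invented numbers, not trained on real text).
bigram_logp = {
    ("t", "h"): math.log(0.5),
    ("h", "e"): math.log(0.5),
    ("t", "j"): math.log(0.001),
    ("j", "e"): math.log(0.1),
}

print(greedy_decode(char_probs))                 # tje
print(viterbi_rescore(char_probs, bigram_logp))  # the
```

On its own, the decoder prefers "tje" because "j" is slightly more likely than "h" at the second keystroke. Once the language model's preference for "th" and "he" is added in, the combined score favors "the", which is the kind of error correction the language model stage is there to provide.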

Was it worth it?

It was definitely worth it. The model achieved an average CER (character error rate) of 32% when decoding from MEG data, far better than with EEG data, where the CER was 67%.

Notably, skilled typists demonstrated exceptional performance with CERs as low as 19%. These results indicate that Brain2Qwerty can effectively capture the hierarchical nature of language production within the brain.
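If the character error rate is new to you, it is usually computed as the edit distance between the decoded text and the reference text, divided by the length of the reference. The sketch below assumes that standard definition (the exact evaluation script used in the study is not described here), and the example sentences are made up.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character insertions, deletions, and substitutions
    needed to turn hyp into ref."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # character missing from hyp
                curr[j - 1] + 1,     # extra character in hyp
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Edit distance normalized by the reference length."""
    if not ref:
        raise ValueError("reference text must not be empty")
    return levenshtein(ref, hyp) / len(ref)


# Made-up example: one substituted character and one missing character.
reference = "the quick brown fox"
decoded = "the quick brpwn fx"
cer = character_error_rate(reference, decoded)
print(f"CER = {cer:.1%}")  # CER = 10.5%
```

By this measure, a CER of 32% means about 32 character edits per 100 reference characters, while 67% means about two out of every three characters need fixing.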

3. Next steps

While current implementations are limited by the size and cost of the recording equipment (approximately $2 million), this research lays the groundwork for future developments in noninvasive BCIs.

The findings suggest that our brains process language hierarchically, from entire sentences down to individual letters, and provide valuable insights into cognitive linguistics.

Brain2Qwerty exemplifies how integrating advanced AI with neuroscience can lead to innovative solutions for communication challenges faced by individuals with speech impairments.

As research progresses, we may see practical applications that enable seamless communication between humans and machines through thought alone.

4. Conclusion

Brain2Qwerty represents a significant advancement in merging technology and neuroscience. By translating brain activity into text, this innovation could have revolutionary applications in communication, particularly for those who have struggled with conventional methods due to physical limitations.

As research advances and technology becomes more accessible, we could soon witness a world where our minds communicate as effortlessly as we think.

The journey from thought to text has only just begun, and the possibilities are as boundless as the human imagination.
